Hadoop 2.4 on CentOS 6.5

Machines

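Role assignment

| Role | Hostname | IP |
|---|---|---|
| namenode | master | 192.168.15.194 |
| datanode | slave1 | 192.168.15.49 |
| datanode | slave2 | 192.168.15.51 |

Hardware (each machine): 4-core CPU / 8 GB RAM / 500 GB disk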

Operating system

CentOS 6.5 64-bit, installed with the "Basic Server" option.

Partitions:

| Mount point | Size |
|---|---|
| / | 50G |
| /data | 450G |

Pre-installation settings (all 3 machines)

- After installation every network interface defaults to ONBOOT=no. Change it to yes and restart the network

vim /etc/sysconfig/network-scripts/ifcfg-eth0

service network restart
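If there are several interfaces, the edit can also be scripted. This is a minimal sketch. It assumes the ifcfg files use the usual ONBOOT= key at the start of a line. enable_onboot is a hypothetical helper, not a CentOS tool:

```bash
#!/usr/bin/env bash
# enable_onboot FILE...: set ONBOOT=yes in each ifcfg file (hypothetical helper).
# An existing ONBOOT line (quoted or not) is replaced; a file without one
# gets the line appended.
enable_onboot() {
  local f
  for f in "$@"; do
    if grep -q '^ONBOOT=' "$f"; then
      sed -i 's/^ONBOOT=.*/ONBOOT=yes/' "$f"
    else
      echo 'ONBOOT=yes' >> "$f"
    fi
  done
}
```

Usage (as root): enable_onboot /etc/sysconfig/network-scripts/ifcfg-eth0 && service network restart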

- Change SELinux from enforcing to disabled

vim /etc/selinux/config
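The same change can be made with sed. This is a sketch. disable_selinux is a hypothetical helper, and it assumes the stock file layout with a SELINUX= line:

```bash
#!/usr/bin/env bash
# disable_selinux [FILE]: set SELINUX=disabled in an SELinux config file
# (hypothetical helper; FILE defaults to /etc/selinux/config).
# The trailing "=" in the pattern keeps SELINUXTYPE= untouched.
disable_selinux() {
  local cfg=${1:-/etc/selinux/config}
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

The file change only takes effect after a reboot. Until then, setenforce 0 switches the running system to permissive mode.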

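- This is a lab environment, so disable the firewall for convenience (chkconfig only affects the next boot; service iptables stop stops it immediately)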

chkconfig iptables off

- Enable sshd

chkconfig sshd on

- Add hosts mappings

vim /etc/hosts

# Add

192.168.15.194 master

192.168.15.49 slave1

192.168.15.51 slave2
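If the setup is re-run on the three machines, appending the mappings only when they are missing keeps /etc/hosts free of duplicates. This is a sketch. add_host is hypothetical, and it matches on the host name only:

```bash
#!/usr/bin/env bash
# add_host IP NAME [FILE]: append "IP NAME" to a hosts file unless NAME is
# already mapped there (hypothetical helper; FILE defaults to /etc/hosts).
add_host() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # Fields 2..NF of a non-comment line are host names.
  if awk -v n="$name" '
       !/^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END   { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage (as root): add_host 192.168.15.194 master; add_host 192.168.15.49 slave1; add_host 192.168.15.51 slave2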

- The SELinux change requires a reboot

reboot

- Save a snapshot of the VM image

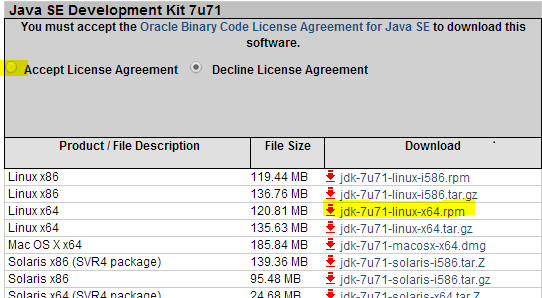

Java installation (all 3 machines)

- Remove the default OpenJDK (OpenJDK actually works with Hadoop too)

rpm -e java-1.6.0-openjdk

rpm -e java-1.7.0-openjdk

- Oracle's official JDK

- Install

rpm -ivh jdk-7u71-linux-x64.rpm

- Configure

Find where the JDK was installed:

rpm -ql jdk

# Installed in /usr/java/jdk1.7.0_71/

Set the environment variables:

vi /etc/profile

# Add

export JAVA_HOME=/usr/java/jdk1.7.0_71/

export PATH=$JAVA_HOME/bin:$PATH

# Log in again, or run source /etc/profile

Creating a hadoop user (all 3 machines)

# Create a hadoop group

groupadd hadoop

# Create the user hduser with hadoop as its primary group

useradd -g hadoop hduser

# Set hduser's password

passwd hduser

On master:

su - hduser

# Generate a key pair

ssh-keygen # press Enter three times

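# Copy the public key to the slaves, and to master itself (start-dfs.sh also logs in to master over SSH)

ssh-copy-id slave1

ssh-copy-id slave2

ssh-copy-id master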

# Check that password-less login works

ssh slave1

ssh slave2
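The key distribution and the login check can be combined into one loop. This is a sketch, run as hduser on master after ssh-keygen. distribute_keys and the SSH_COPY_ID/SSH override variables are hypothetical. BatchMode=yes makes ssh fail instead of prompting, so a host that still wants a password is reported:

```bash
#!/usr/bin/env bash
# Command hooks; override them (e.g. with "true") for a dry run.
SSH_COPY_ID=${SSH_COPY_ID:-ssh-copy-id}
SSH=${SSH:-ssh}

# distribute_keys HOST...: copy hduser's public key to each host, then verify
# that a non-interactive login works (hypothetical helper).
distribute_keys() {
  local host
  for host in "$@"; do
    "$SSH_COPY_ID" "$host" || return 1
    # BatchMode=yes: fail instead of asking for a password.
    "$SSH" -o BatchMode=yes "$host" true || {
      echo "login to $host still needs a password" >&2
      return 1
    }
  done
}
```

Usage: distribute_keys slave1 slave2 master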

Compiling Hadoop

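Skip this step if you use a CDH tarball or another prebuilt 64-bit Hadoop package. It is only needed because the official Hadoop tarball ships 32-bit native libraries. The build runs on master.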

- Install the packages needed for the build

yum install -y lzo-devel zlib-devel gcc gcc-c++ cmake autoconf automake libtool ncurses-devel openssl-devel

- Install Maven

wget http://mirrors.cnnic.cn/apache/maven/maven-3/3.2.3/binaries/apache-maven-3.2.3-bin.tar.gz

tar xvf apache-maven-3.2.3-bin.tar.gz -C /usr/local/

mv -v /usr/local/apache-maven-3.2.3/ /usr/local/maven

- Install Ant

wget -c http://mirrors.cnnic.cn/apache/ant/binaries/apache-ant-1.9.4-bin.tar.gz

tar xvf apache-ant-1.9.4-bin.tar.gz -C /usr/local/

mv -v /usr/local/apache-ant-1.9.4/ /usr/local/ant

- Install protobuf. Note that Hadoop 2.4 requires protobuf 2.5, so you must use exactly this version

wget -c https://protobuf.googlecode.com/files/protobuf-2.5.0.tar.gz

tar xf protobuf-2.5.0.tar.gz

cd protobuf-2.5.0

./configure

make

make install

protoc --version # should print libprotoc 2.5.0
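The build only works with protobuf 2.5, so it helps to fail fast before starting Maven. This is a sketch. require_protoc and the PROTOC override variable are hypothetical:

```bash
#!/usr/bin/env bash
# require_protoc VERSION: succeed only if `protoc --version` prints exactly
# "libprotoc VERSION" (hypothetical helper; PROTOC overrides the binary).
require_protoc() {
  local want=$1 got
  got=$("${PROTOC:-protoc}" --version 2>/dev/null) || {
    echo "protoc not found or not runnable" >&2
    return 1
  }
  if [ "$got" != "libprotoc $want" ]; then
    echo "expected libprotoc $want, got: $got" >&2
    return 1
  fi
}
```

Usage: require_protoc 2.5.0 && mvn package -DskipTests -Pdist,native -Dtar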

- Set the environment variables

echo -e 'export MAVEN_HOME=/usr/local/maven\nexport ANT_HOME=/usr/local/ant\nexport PATH="$JAVA_HOME/bin:$MAVEN_HOME/bin:$ANT_HOME/bin:$PATH"' >> /etc/profile

source /etc/profile

- Download the Hadoop source and build it

wget -c https://archive.apache.org/dist/hadoop/core/hadoop-2.4.0/hadoop-2.4.0-src.tar.gz

tar xf hadoop-2.4.0-src.tar.gz

cd hadoop-2.4.0-src

mvn package -DskipTests -Pdist,native -Dtar

- Build output (produces hadoop-2.4.0.tar.gz)

main:

[exec] $ tar cf hadoop-2.4.0.tar hadoop-2.4.0

[exec] $ gzip -f hadoop-2.4.0.tar

[exec]

[exec] Hadoop dist tar available at: /root/hadoop-2.4.0-src/hadoop-dist/target/hadoop-2.4.0.tar.gz

[exec]

[INFO] Executed tasks

[INFO]

[INFO] --- maven-javadoc-plugin:2.8.1:jar (module-javadocs) @ hadoop-dist ---

[INFO] Building jar: /root/hadoop-2.4.0-src/hadoop-dist/target/hadoop-dist-2.4.0-javadoc.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Apache Hadoop Main ................................. SUCCESS [ 1.568 s]

[INFO] Apache Hadoop Project POM .......................... SUCCESS [ 1.307 s]

[INFO] Apache Hadoop Annotations .......................... SUCCESS [ 3.005 s]

[INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.219 s]

[INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 2.081 s]

[INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 3.784 s]

[INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 2.866 s]

[INFO] Apache Hadoop Auth ................................. SUCCESS [ 3.660 s]

[INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 2.890 s]

[INFO] Apache Hadoop Common ............................... SUCCESS [02:57 min]

[INFO] Apache Hadoop NFS .................................. SUCCESS [ 27.559 s]

[INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.045 s]

[INFO] Apache Hadoop HDFS ................................. SUCCESS [05:10 min]

[INFO] Apache Hadoop HttpFS ............................... SUCCESS [01:24 min]

[INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 31.401 s]

[INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 4.100 s]

[INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.045 s]

[INFO] hadoop-yarn ........................................ SUCCESS [ 0.043 s]

[INFO] hadoop-yarn-api .................................... SUCCESS [01:33 min]

[INFO] hadoop-yarn-common ................................. SUCCESS [01:00 min]

[INFO] hadoop-yarn-server ................................. SUCCESS [ 0.033 s]

[INFO] hadoop-yarn-server-common .......................... SUCCESS [ 10.501 s]

[INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [01:17 min]

[INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 3.409 s]

[INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 20.021 s]

[INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 16.004 s]

[INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 0.509 s]

[INFO] hadoop-yarn-client ................................. SUCCESS [ 5.666 s]

[INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.033 s]

[INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 3.434 s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 2.242 s]

[INFO] hadoop-yarn-site ................................... SUCCESS [ 0.031 s]

[INFO] hadoop-yarn-project ................................ SUCCESS [ 6.757 s]

[INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.076 s]

[INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 25.510 s]

[INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 21.579 s]

[INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 3.194 s]

[INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 10.552 s]

[INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 8.453 s]

[INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 17.860 s]

[INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 2.222 s]

[INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 6.939 s]

[INFO] hadoop-mapreduce ................................... SUCCESS [ 4.609 s]

[INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 9.774 s]

[INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 23.630 s]

[INFO] Apache Hadoop Archives ............................. SUCCESS [ 2.491 s]

[INFO] Apache Hadoop Rumen ................................ SUCCESS [ 6.930 s]

[INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 4.716 s]

[INFO] Apache Hadoop Data Join ............................ SUCCESS [ 3.114 s]

[INFO] Apache Hadoop Extras ............................... SUCCESS [ 2.977 s]

[INFO] Apache Hadoop Pipes ................................ SUCCESS [ 12.205 s]

[INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 5.807 s]

[INFO] Apache Hadoop Client ............................... SUCCESS [ 9.573 s]

[INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 0.092 s]

[INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 10.650 s]

[INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 7.451 s]

[INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.028 s]

[INFO] Apache Hadoop Distribution ......................... SUCCESS [ 32.950 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 19:53 min

[INFO] Finished at: 2014-11-02T17:25:08+08:00

[INFO] Final Memory: 171M/853M

[INFO] ------------------------------------------------------------------------
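The point of building was to get 64-bit native libraries. You can confirm this by reading the ELF class byte of libhadoop. This is a sketch. elf_bits is hypothetical, and the usage path assumes the build leaves the unpacked distribution in hadoop-dist/target/hadoop-2.4.0:

```bash
#!/usr/bin/env bash
# elf_bits FILE: print 32 or 64 according to the ELF class of FILE
# (hypothetical helper).
elf_bits() {
  local f=$1 cls
  # Offsets 1-3 of every ELF file hold the letters "ELF".
  if [ "$(head -c 4 "$f" | tail -c 3)" != "ELF" ]; then
    echo "$f is not an ELF file" >&2
    return 1
  fi
  # Offset 4 is EI_CLASS: 1 = ELFCLASS32, 2 = ELFCLASS64.
  cls=$(od -An -t u1 -j 4 -N 1 "$f" 2>/dev/null | tr -d ' ')
  case $cls in
    1) echo 32 ;;
    2) echo 64 ;;
    *) echo "$f: unknown ELF class '$cls'" >&2; return 1 ;;
  esac
}
```

Usage: elf_bits hadoop-dist/target/hadoop-2.4.0/lib/native/libhadoop.so.1.0.0 should print 64. Running file on the same library should also report "ELF 64-bit".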

Installing Hadoop (all 3 machines)

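- This just means unpacking the tarball (it was built on master, so copy hadoop-2.4.0.tar.gz to the slaves first)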

tar xf /root/hadoop-2.4.0-src/hadoop-dist/target/hadoop-2.4.0.tar.gz -C /usr/local

mv /usr/local/hadoop-2.4.0 /usr/local/hadoop

chown -R hduser:hadoop /usr/local/hadoop

- Create some directories

mkdir -pv /data/hadoop/{tmp,data,mapred}

chown -R hduser:hadoop /data/hadoop

Configuring Hadoop

- First switch to hduser. Starting services and similar operations should all be done as hduser, so make it a habit

su - hduser

- Set the environment variables

vi .bashrc

# Add

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

- Log in again

exit

su - hduser

- Hadoop's configuration files now live in $HADOOP_HOME/etc/hadoop

cd $HADOOP_HOME/etc/hadoop

- Configure core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://master:54310</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>master:54311</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/tmp</value>

</property>

</configuration>

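Pay attention to the hadoop.tmp.dir parameter. I used to set it in hdfs-site.xml, but the official default configuration files define it in core-site.xml. Leaving it unset did not seem to make a difference either, although its default, /tmp/hadoop-${user.name}, lives under /tmp. Also note that fs.default.name is the deprecated name of fs.defaultFS (Hadoop 2.4 still accepts it), and mapred.job.tracker is an MRv1 setting that YARN does not use.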
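All the *-site.xml files share the same property layout, so they can be generated instead of typed by hand. This is a sketch. hadoop_property and site_xml are hypothetical helpers, and values are not XML-escaped:

```bash
#!/usr/bin/env bash
# hadoop_property NAME VALUE: print one <property> element in the layout
# used by the *-site.xml files (hypothetical helper; VALUE is not escaped).
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# site_xml NAME=VALUE...: print a complete <configuration> document.
# Everything after the first "=" is the value.
site_xml() {
  local kv
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  for kv in "$@"; do
    hadoop_property "${kv%%=*}" "${kv#*=}"
  done
  echo '</configuration>'
}
```

Usage: site_xml fs.default.name=hdfs://master:54310 hadoop.tmp.dir=/data/hadoop/tmp > core-site.xml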

- Configure hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/data/hadoop/data</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/tmp</value>

</property>

</configuration>

- Configure mapred-site.xml. Download the official configuration template:

wget http://hadoop.apache.org/docs/r2.4.0/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

mv -v mapred-default.xml mapred-site.xml

Change one setting (the default is local):

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- Configure yarn-site.xml

wget http://hadoop.apache.org/docs/r2.4.0/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

mv -v yarn-default.xml yarn-site.xml

Change the following settings:

<property>

<description>The hostname of the RM.</description>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

The next value is set mainly because its original default, ${yarn.log.dir}, is never defined. I set it while debugging the problem described below:

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/tmp/userlogs</value>

</property>

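The next value, yarn.nodemanager.aux-services, is empty by default. With it unset, running the YARN test job fails with the following error: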

WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Failed to launch container.

java.io.FileNotFoundException: File /data/hadoop/tmp/nm-local-dir/usercache/hduser/appcache/application_1415026555550_0002/container_1415026555550_0002_01_000001 does not exist

<property>

<description>the valid service name should only contain a-zA-Z0-9_ and can not start with numbers</description>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<!--<value>mapreduce_shuffle</value>-->

</property>
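The missing aux-services value only shows up once a job runs, so checking the file before starting YARN can save a debugging round. This is a sketch. get_property is hypothetical, and it assumes <name> and <value> sit on their own lines as in the files above. It is not a real XML parser:

```bash
#!/usr/bin/env bash
# get_property FILE NAME: print the <value> of property NAME from a Hadoop
# *-site.xml file (hypothetical helper, line-based, not a full XML parser).
get_property() {
  awk -v want="$2" '
    /<name>/ { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ && n == want {
      v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
      print v; exit
    }
    /<\/property>/ { n = "" }
  ' "$1"
}
```

Usage: [ "$(get_property yarn-site.xml yarn.nodemanager.aux-services)" = mapreduce_shuffle ] || echo "aux-services not set"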

- Configure hadoop-env.sh: change

export JAVA_HOME=/usr/java/jdk1.7.0_71

Also change the following line. The key part is the trailing -Djava.library.path option, which the default line lacks:

export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true -Djava.library.path=/usr/local/hadoop/lib/native"

The change above fixes the following problem. To see the debug output, run export HADOOP_ROOT_LOGGER=DEBUG,console and then any hadoop command:

14/11/03 09:12:31 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

14/11/03 09:12:31 DEBUG util.NativeCodeLoader: Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path

14/11/03 09:12:31 DEBUG util.NativeCodeLoader: java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

14/11/03 09:12:31 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

# Debug output after the change

14/11/03 09:41:51 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

14/11/03 09:41:51 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library
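This before/after check can be scripted by looking for the NativeCodeLoader messages shown above. This is a sketch. native_status is hypothetical and only matches the message texts quoted in this section:

```bash
#!/usr/bin/env bash
# native_status: read Hadoop debug output on stdin and print "native" if the
# native-hadoop library was loaded, "builtin-java" if Hadoop fell back to the
# Java implementations, or "unknown" otherwise (hypothetical helper).
native_status() {
  local log
  log=$(cat)
  if grep -q 'Loaded the native-hadoop library' <<<"$log"; then
    echo native
  elif grep -q 'Unable to load native-hadoop library' <<<"$log"; then
    echo builtin-java
  else
    echo unknown
  fi
}
```

Usage: HADOOP_ROOT_LOGGER=DEBUG,console hadoop fs -ls / 2>&1 | native_status. The 2>&1 is needed because Hadoop's console logger writes to stderr.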

- Configure yarn-env.sh

# Change this line, for the same reason as above

YARN_OPTS="$YARN_OPTS -Dyarn.policy.file=$YARN_POLICYFILE -Djava.library.path=/usr/local/hadoop/lib/native"

- Configure masters and slaves

echo "master" > masters

vim slaves

# Remove localhost and add:

slave1

slave2

- After editing the files above on master, sync them to slave1 and slave2

scp masters slave1:/usr/local/hadoop/etc/hadoop

scp slaves slave1:/usr/local/hadoop/etc/hadoop

scp hadoop-env.sh slave1:/usr/local/hadoop/etc/hadoop

scp yarn-env.sh slave1:/usr/local/hadoop/etc/hadoop

scp yarn-site.xml slave1:/usr/local/hadoop/etc/hadoop

scp mapred-site.xml slave1:/usr/local/hadoop/etc/hadoop

scp hdfs-site.xml slave1:/usr/local/hadoop/etc/hadoop

scp core-site.xml slave1:/usr/local/hadoop/etc/hadoop

scp /home/hduser/.bashrc slave1:/home/hduser/.bashrc

scp masters slave2:/usr/local/hadoop/etc/hadoop

scp slaves slave2:/usr/local/hadoop/etc/hadoop

scp hadoop-env.sh slave2:/usr/local/hadoop/etc/hadoop

scp yarn-env.sh slave2:/usr/local/hadoop/etc/hadoop

scp yarn-site.xml slave2:/usr/local/hadoop/etc/hadoop

scp mapred-site.xml slave2:/usr/local/hadoop/etc/hadoop

scp hdfs-site.xml slave2:/usr/local/hadoop/etc/hadoop

scp core-site.xml slave2:/usr/local/hadoop/etc/hadoop

scp /home/hduser/.bashrc slave2:/home/hduser/.bashrc
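The eighteen scp commands above can be collapsed into a loop that copies all configuration files to each slave in one call. This is a sketch. sync_conf, CONF_FILES and the SCP override variable are hypothetical names:

```bash
#!/usr/bin/env bash
SCP=${SCP:-scp}                               # override (e.g. SCP=echo) for a dry run
CONF_DIR=${CONF_DIR:-/usr/local/hadoop/etc/hadoop}
CONF_FILES=(masters slaves hadoop-env.sh yarn-env.sh yarn-site.xml
            mapred-site.xml hdfs-site.xml core-site.xml)

# sync_conf HOST...: push the Hadoop config files and hduser's .bashrc
# to each host (hypothetical helper).
sync_conf() {
  local host f
  local paths=()
  for f in "${CONF_FILES[@]}"; do
    paths+=("$CONF_DIR/$f")
  done
  for host in "$@"; do
    # One scp call per host copies all configuration files at once.
    "$SCP" "${paths[@]}" "$host:$CONF_DIR/" || return 1
    "$SCP" /home/hduser/.bashrc "$host:/home/hduser/.bashrc" || return 1
  done
}
```

Usage (as hduser on master): sync_conf slave1 slave2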

Formatting HDFS (master)

su - hduser

hadoop namenode -format

Note: if the NameNode has been formatted more than once, the DataNodes may fail with the following error:

2014-11-03 22:04:30,570 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for block pool Block pool <registering> (Datanode Uuid unassigned) service to master/192.168.15.194:9001

java.io.IOException: Incompatible clusterIDs in /data/hadoop/tmp/dfs/data: namenode clusterID = CID-4ffa87fa-c743-43fd-bbdb-4ff0c53d8906; datanode clusterID = CID-fcbba7bd-0d64-45dc-b80a-d3e382807a69

at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:472)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:225)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:249)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:929)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:900)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:274)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:220)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:815)

at java.lang.Thread.run(Thread.java:745)

2014-11-03 22:04:30,573 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool <registering> (Datanode Uuid unassigned) service to master/192.168.15.194:9001

2014-11-03 22:04:30,576 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Block pool ID needed, but service not yet registered with NN

java.lang.Exception: trace

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.getBlockPoolId(BPOfferService.java:143)

at org.apache.hadoop.hdfs.server.datanode.BlockPoolManager.remove(BlockPoolManager.java:91)

at org.apache.hadoop.hdfs.server.datanode.DataNode.shutdownBlockPool(DataNode.java:859)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.shutdownActor(BPOfferService.java:350)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.cleanUp(BPServiceActor.java:619)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:837)

at java.lang.Thread.run(Thread.java:745)

2014-11-03 22:04:30,576 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Removed Block pool <registering> (Datanode Uuid unassigned)

2014-11-03 22:04:30,577 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Block pool ID needed, but service not yet registered with NN

java.lang.Exception: trace

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.getBlockPoolId(BPOfferService.java:143)

at org.apache.hadoop.hdfs.server.datanode.DataNode.shutdownBlockPool(DataNode.java:861)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.shutdownActor(BPOfferService.java:350)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.cleanUp(BPServiceActor.java:619)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:837)

at java.lang.Thread.run(Thread.java:745)

2014-11-03 22:04:32,577 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Exiting Datanode

2014-11-03 22:04:32,580 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 0

2014-11-03 22:04:32,582 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DataNode at slave1/192.168.15.49

************************************************************/
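To confirm that this is a clusterID mismatch, compare the clusterID lines of the NameNode and DataNode VERSION files. This is a sketch. cluster_id and same_cluster are hypothetical. The usage paths assume the defaults under hadoop.tmp.dir seen in the log above: dfs/name/current/VERSION on master and dfs/data/current/VERSION on the slaves.

```bash
#!/usr/bin/env bash
# cluster_id VERSION_FILE: print the clusterID stored in an HDFS storage
# VERSION file (hypothetical helper).
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# same_cluster NN_VERSION DN_VERSION: succeed if both files carry the same,
# non-empty clusterID (hypothetical helper).
same_cluster() {
  local nn dn
  nn=$(cluster_id "$1")
  dn=$(cluster_id "$2")
  if [ -z "$nn" ] || [ "$nn" != "$dn" ]; then
    echo "namenode clusterID=$nn, datanode clusterID=$dn" >&2
    return 1
  fi
}
```

Usage: scp slave1:/data/hadoop/tmp/dfs/data/current/VERSION /tmp/dn-VERSION && same_cluster /data/hadoop/tmp/dfs/name/current/VERSION /tmp/dn-VERSION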

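First empty the directory set by hadoop.tmp.dir in core-site.xml, keeping the directory itself. If dfs.data.dir points elsewhere (as with /data/hadoop/data in the hdfs-site.xml above), empty that directory too. For example:

mkdir -pv /data/backup/tmp

mv -v /data/hadoop/tmp/* /data/backup/tmp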
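A slightly more robust version of the mv above also moves hidden files and creates the backup directory. It succeeds quietly when the directory is already empty. This is a sketch. empty_into is hypothetical. Run it with HDFS stopped, on each affected DataNode:

```bash
#!/usr/bin/env bash
# empty_into SRC DST: move everything inside SRC (hidden files included)
# into DST, creating DST if needed; SRC itself is kept (hypothetical helper).
empty_into() {
  local src=$1 dst=$2
  mkdir -p -- "$dst" || return 1
  (
    # nullglob: an empty SRC yields no items; dotglob: include hidden files
    # (bash never matches . and .. with *).
    shopt -s nullglob dotglob
    items=("$src"/*)
    [ "${#items[@]}" -eq 0 ] && exit 0
    mv -- "${items[@]}" "$dst"/
  )
}
```

Usage: empty_into /data/hadoop/tmp "/data/backup/tmp-$(date +%Y%m%d%H%M%S)"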

Starting Hadoop and YARN (master)

su - hduser

cd $HADOOP_HOME/sbin

./start-dfs.sh

./start-yarn.sh

Logs go to $HADOOP_HOME/logs by default. It would be better to move them under /data/.
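One way to do that is to set the log directories in the env scripts. This assumes a /data/hadoop/logs directory (my choice, not part of the original setup), created and chowned to hduser:hadoop like the other /data directories. HADOOP_LOG_DIR is read from hadoop-env.sh and YARN_LOG_DIR from yarn-env.sh:

```bash
# In $HADOOP_HOME/etc/hadoop/hadoop-env.sh (directory name is an assumption)
export HADOOP_LOG_DIR=/data/hadoop/logs

# In $HADOOP_HOME/etc/hadoop/yarn-env.sh
export YARN_LOG_DIR=/data/hadoop/logs
```

Both files have to be synced to the slaves again afterwards.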

Testing Hadoop and YARN (master)

- Check the HDFS status

su - hduser

hdfs dfsadmin -report

Output:

Configured Capacity: 948247920640 (883.12 GB)

Present Capacity: 899919179776 (838.12 GB)

DFS Remaining: 899914633216 (838.11 GB)

DFS Used: 4546560 (4.34 MB)

DFS Used%: 0.00%

Under replicated blocks: 6

Blocks with corrupt replicas: 0

Missing blocks: 0

Datanodes available: 2 (2 total, 0 dead)

Live datanodes:

Name: 192.168.15.51:50010 (slave2)

Hostname: slave2

Decommission Status : Normal

Configured Capacity: 474123960320 (441.56 GB)

DFS Used: 2273280 (2.17 MB)

Non DFS Used: 24164368384 (22.50 GB)

DFS Remaining: 449957318656 (419.06 GB)

DFS Used%: 0.00%

DFS Remaining%: 94.90%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Tue Nov 04 14:40:17 CST 2014

Name: 192.168.15.49:50010 (slave1)

Hostname: slave1

Decommission Status : Normal

Configured Capacity: 474123960320 (441.56 GB)

DFS Used: 2273280 (2.17 MB)

Non DFS Used: 24164372480 (22.50 GB)

DFS Remaining: 449957314560 (419.06 GB)

DFS Used%: 0.00%

DFS Remaining%: 94.90%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Tue Nov 04 14:40:16 CST 2014
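For scripted checks, the summary line can be pulled out of the report. This is a sketch. report_counts and expect_live are hypothetical, and they rely on the "Datanodes available: N (T total, D dead)" wording shown above, which other Hadoop versions may print differently:

```bash
#!/usr/bin/env bash
# report_counts: read `hdfs dfsadmin -report` output on stdin and print
# "LIVE DEAD" from its "Datanodes available:" line (hypothetical helper).
report_counts() {
  sed -n 's/^Datanodes available: \([0-9]*\) ([0-9]* total, \([0-9]*\) dead).*/\1 \2/p'
}

# expect_live N: succeed if at least N DataNodes are live and none are dead.
expect_live() {
  local counts live dead
  counts=$(report_counts)
  read -r live dead <<<"$counts"
  [ "${live:-0}" -ge "$1" ] && [ "${dead:-1}" -eq 0 ]
}
```

Usage: hdfs dfsadmin -report | expect_live 2 || echo "not all DataNodes are up"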

- Related ports and web UIs: master:8088 (YARN ResourceManager) and master:50070 (HDFS NameNode)

- Run the YARN test program

yarn jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.4.0.jar pi 16 1000000

Output:

Number of Maps = 16

Samples per Map = 1000000

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

Wrote input for Map #4

Wrote input for Map #5

Wrote input for Map #6

Wrote input for Map #7

Wrote input for Map #8

Wrote input for Map #9

Wrote input for Map #10

Wrote input for Map #11

Wrote input for Map #12

Wrote input for Map #13

Wrote input for Map #14

Wrote input for Map #15

Starting Job

14/11/04 14:44:22 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.15.194:8032

14/11/04 14:44:23 INFO input.FileInputFormat: Total input paths to process : 16

14/11/04 14:44:23 INFO mapreduce.JobSubmitter: number of splits:16

14/11/04 14:44:24 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1415029529475_0004

14/11/04 14:44:24 INFO impl.YarnClientImpl: Submitted application application_1415029529475_0004

14/11/04 14:44:24 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1415029529475_0004/

14/11/04 14:44:24 INFO mapreduce.Job: Running job: job_1415029529475_0004

14/11/04 14:44:31 INFO mapreduce.Job: Job job_1415029529475_0004 running in uber mode : false

14/11/04 14:44:31 INFO mapreduce.Job: map 0% reduce 0%

14/11/04 14:44:47 INFO mapreduce.Job: map 19% reduce 0%

14/11/04 14:44:48 INFO mapreduce.Job: map 38% reduce 0%

14/11/04 14:44:51 INFO mapreduce.Job: map 44% reduce 0%

14/11/04 14:44:52 INFO mapreduce.Job: map 88% reduce 0%

14/11/04 14:44:54 INFO mapreduce.Job: map 100% reduce 0%

14/11/04 14:44:56 INFO mapreduce.Job: map 100% reduce 100%

14/11/04 14:44:56 INFO mapreduce.Job: Job job_1415029529475_0004 completed successfully

14/11/04 14:44:56 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=358

FILE: Number of bytes written=1569687

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=4214

HDFS: Number of bytes written=215

HDFS: Number of read operations=67

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

Job Counters

Launched map tasks=16

Launched reduce tasks=1

Data-local map tasks=16

Total time spent by all maps in occupied slots (ms)=237380

Total time spent by all reduces in occupied slots (ms)=6042

Total time spent by all map tasks (ms)=237380

Total time spent by all reduce tasks (ms)=6042

Total vcore-seconds taken by all map tasks=237380

Total vcore-seconds taken by all reduce tasks=6042

Total megabyte-seconds taken by all map tasks=243077120

Total megabyte-seconds taken by all reduce tasks=6187008

Map-Reduce Framework

Map input records=16

Map output records=32

Map output bytes=288

Map output materialized bytes=448

Input split bytes=2326

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=448

Reduce input records=32

Reduce output records=0

Spilled Records=64

Shuffled Maps =16

Failed Shuffles=0

Merged Map outputs=16

GC time elapsed (ms)=1984

CPU time spent (ms)=14100

Physical memory (bytes) snapshot=4286451712

Virtual memory (bytes) snapshot=14933258240

Total committed heap usage (bytes)=3313500160

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1888

File Output Format Counters

Bytes Written=97

Job Finished in 33.734 seconds

Estimated value of Pi is 3.14159125000000000000

Debugging notes: Failed to launch container

Problem: running the YARN test job to check the Hadoop deployment:

/usr/local/hadoop/bin/yarn jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.4.0.jar pi 16 10000

fails on the DataNodes, and MapReduce jobs cannot run:

2014-11-03 22:59:08,108 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Failed to launch container.

java.io.FileNotFoundException: File /data/hadoop/tmp/nm-local-dir/usercache/hduser/appcache/application_1415026555550_0002/container_1415026555550_0002_01_000001 does not exist

Debugging steps

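1. A page I found said hadoop.tmp.dir should be set in core-site.xml. I changed it, but it had no effect.

2. I downloaded the default configuration file and changed only one setting, yarn.resourcemanager.hostname. The result was the same.

3. In yarn-site.xml, I changed yarn.nodemanager.log-dirs from ${yarn.log.dir}/userlogs to /tmp/userlogs, and yarn.timeline-service.leveldb-timeline-store.path from ${yarn.log.dir}/timeline to /tmp/timeline. This time the terminal finally printed many repeated errors: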

14/11/03 23:41:40 INFO mapreduce.Job: Task Id : attempt_1415029145516_0002_m_000005_2, Status : FAILED

Container launch failed for container_1415029145516_0002_01_000043 : org.apache.hadoop.yarn.exceptions.InvalidAuxServiceException: The auxService:mapreduce_shuffle does not exist

4. Searching for those keywords turned up a page whose hint showed that yarn.nodemanager.aux-services in yarn-site.xml is indeed empty by default. Changing it to mapreduce_shuffle solved the problem.

Deploying ZooKeeper (all 3 machines)

- Download and install

wget -c http://mirror.tcpdiag.net/apache/zookeeper/stable/zookeeper-3.4.6.tar.gz

tar xf zookeeper-3.4.6.tar.gz -C /usr/local/

ln -sv /usr/local/zookeeper-3.4.6 /usr/local/zookeeper

- Create the required directories

mkdir -pv /data/{zookeeper,logs}

chown -R hduser:hadoop /data/zookeeper

chown -R hduser:hadoop /data/logs

- Set the environment variables

su - hduser

vim ~/.bashrc

# Add

export ZOOKEEPER_PREFIX=/usr/local/zookeeper

export ZOO_LOG_DIR=/data/logs

If ZOO_LOG_DIR is not set, the line _ZOO_DAEMON_OUT="$ZOO_LOG_DIR/zookeeper.out" in zkServer.sh hits a permission error and startup fails.

- Configure ZooKeeper

# Create zoo.cfg

vim /usr/local/zookeeper/conf/zoo.cfg

# Add

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper

clientPort=2181

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888
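The same file goes to all three machines, so it can be generated from the host list. This is a sketch. gen_zoo_cfg is hypothetical and hard-codes the timing values and ports used above: 2888 for follower connections to the leader and 3888 for leader election.

```bash
#!/usr/bin/env bash
# gen_zoo_cfg DATADIR HOST...: print a zoo.cfg with the settings used above.
# The N-th host becomes server.N, so the order must match each node's myid
# (hypothetical helper).
gen_zoo_cfg() {
  local datadir=$1 i=1 host
  shift
  printf 'tickTime=2000\ninitLimit=10\nsyncLimit=5\n'
  printf 'dataDir=%s\nclientPort=2181\n' "$datadir"
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$i" "$host"
    i=$((i + 1))
  done
}
```

Usage: gen_zoo_cfg /data/zookeeper master slave1 slave2 > /usr/local/zookeeper/conf/zoo.cfg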

- Create a myid file in the directory given by dataDir

# master

echo 1 > /data/zookeeper/myid

# slave1

echo 2 > /data/zookeeper/myid

# slave2

echo 3 > /data/zookeeper/myid
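The myid value is just the host's position in the server.N list, so each machine can derive it instead of having it typed by hand. This is a sketch. myid_for is hypothetical, and the usage assumes hostname -s returns master, slave1 or slave2:

```bash
#!/usr/bin/env bash
# myid_for HOST HOST1 HOST2 ...: print HOST's 1-based position in the list,
# i.e. the N of its server.N line in zoo.cfg (hypothetical helper).
myid_for() {
  local me=$1 i=1 host
  shift
  for host in "$@"; do
    if [ "$host" = "$me" ]; then
      echo "$i"
      return 0
    fi
    i=$((i + 1))
  done
  echo "$me is not in the server list" >&2
  return 1
}
```

Usage: id=$(myid_for "$(hostname -s)" master slave1 slave2) && echo "$id" > /data/zookeeper/myid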

- Start

/usr/local/zookeeper/bin/zkServer.sh start

- Check ZooKeeper's status (each node should answer imok)

echo 'ruok' | nc master 2181

echo 'ruok' | nc slave1 2181

echo 'ruok' | nc slave2 2181

# zktop is recommended

python zktop.py -c /usr/local/zookeeper/conf/zoo.cfg
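The three ruok checks can be written as a loop that reports failures. This is a sketch. zk_check and the NC override variable are hypothetical. An imok reply only means the server process is answering. echo stat | nc master 2181 (or zkServer.sh status) also shows whether a node is the leader or a follower.

```bash
#!/usr/bin/env bash
# zk_check HOST...: send "ruok" to port 2181 of each host and report the
# ones that do not answer "imok" (hypothetical helper; NC overrides nc).
zk_check() {
  local host reply bad=0
  for host in "$@"; do
    reply=$(echo ruok | "${NC:-nc}" "$host" 2181 2>/dev/null)
    if [ "$reply" = imok ]; then
      echo "$host: ok"
    else
      echo "$host: no imok reply" >&2
      bad=1
    fi
  done
  return "$bad"
}
```

Usage: zk_check master slave1 slave2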

Deploying HBase (all 3 machines)

Role assignment

| Role | Hostname | IP |
|---|---|---|
| HMaster | master | 192.168.15.194 |
| HRegionServer | slave1 | 192.168.15.49 |
| HRegionServer | slave2 | 192.168.15.51 |

- Download and install

wget -c http://mirrors.cnnic.cn/apache/hbase/hbase-0.98.8/hbase-0.98.8-hadoop2-bin.tar.gz

tar xvf hbase-0.98.8-hadoop2-bin.tar.gz -C /usr/local

chown -R hduser:hadoop /usr/local/hbase-0.98.8-hadoop2/

ln -sv /usr/local/hbase-0.98.8-hadoop2/ /usr/local/hbase

- Create the required directories

mkdir -p /data/hbase

chown -R hduser:hadoop /data/hbase

- Configure the environment

su - hduser

vim /usr/local/hbase/conf/hbase-env.sh

# Add

export JAVA_HOME=/usr/java/jdk1.7.0_71

export HBASE_MANAGES_ZK=false

vim /usr/local/hbase/conf/hbase-site.xml

# Add the following configuration:

<configuration>

<property>

<name>hbase.tmp.dir</name>

<value>/data/hbase</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:54310/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,slave1,slave2</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/data/zookeeper</value>

</property>

</configuration>

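export HBASE_MANAGES_ZK=false makes HBase use the existing ZooKeeper ensemble instead of the ZooKeeper bundled with HBase (/usr/local/hbase/lib/zookeeper-3.4.6.jar).

hbase.rootdir must use the fs.default.name value from $HADOOP_HOME/etc/hadoop/core-site.xml (here hdfs://master:54310), followed by the /hbase path.

hbase.zookeeper.property.clientPort defaults to 2181, so it can be omitted.

hbase.zookeeper.property.dataDir is the dataDir value from /usr/local/zookeeper/conf/zoo.cfg.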
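The hbase.rootdir rule is easy to break when ports change: the earlier error log shows port 9001, while core-site.xml uses 54310. Here is a sketch of a check. rootdir_on_hdfs is hypothetical and compares host names literally, so master and its IP address count as different:

```bash
#!/usr/bin/env bash
# rootdir_on_hdfs ROOTDIR FS_DEFAULT: succeed if hbase.rootdir lies inside
# the file system named by fs.default.name (hypothetical helper).
rootdir_on_hdfs() {
  local rootdir=$1 fs=${2%/}     # drop a trailing slash from fs.default.name
  case $rootdir in
    "$fs"/*) return 0 ;;         # quoted part is literal, /* is a glob
    *) echo "hbase.rootdir $rootdir is not under $fs" >&2; return 1 ;;
  esac
}
```

Usage: rootdir_on_hdfs hdfs://master:54310/hbase hdfs://master:54310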

- Copy to the other nodes

scp -r /usr/local/hbase-0.98.8-hadoop2/ slave1:/usr/local

scp -r /usr/local/hbase-0.98.8-hadoop2/ slave2:/usr/local

- Add the region servers (on master)

vim /usr/local/hbase/conf/regionservers

# Add

slave1

slave2

- Start (run on master)

su - hduser

cd /usr/local/hbase/bin

./start-hbase.sh

- Check

jps

master

28 Jps

2021 SecondaryNameNode

6657 HMaster

2222 ResourceManager

2609 QuorumPeerMain

1830 NameNode

slave1

4522 Jps

2594 QuorumPeerMain

4185 HRegionServer

1639 DataNode

1757 NodeManager

slave2

1643 DataNode

3900 Jps

1760 NodeManager

2424 QuorumPeerMain

3700 HRegionServer
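Comparing jps output by eye gets tedious. This is a sketch that lists the expected daemons that are missing. missing_daemons is hypothetical; the expected names come from the output above:

```bash
#!/usr/bin/env bash
# missing_daemons "JPS_OUTPUT" NAME...: print each NAME that does not appear
# as a process name (second column) in the jps output (hypothetical helper).
missing_daemons() {
  local out=$1 name
  shift
  for name in "$@"; do
    awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' <<<"$out" || echo "$name"
  done
}
```

Usage on master: missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager QuorumPeerMain HMaster. On the slaves: missing_daemons "$(jps)" DataNode NodeManager QuorumPeerMain HRegionServer. No output means everything is running.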

Check that the HBase shell starts:

cd /usr/local/hbase/bin

./hbase shell

Inside the shell, run list and check for errors

Deploying OpenTSDB (master)

OpenTSDB is deployed mainly to verify ZooKeeper and HBase

Run the commands as root

- Download and install

wget -c https://github.com/OpenTSDB/opentsdb/releases/download/v2.0.0/opentsdb-2.0.0.noarch.rpm

yum install gnuplot

rpm -ivh opentsdb-2.0.0.noarch.rpm

- Configure

vim /usr/share/opentsdb/etc/opentsdb/opentsdb.conf

# Change

tsd.storage.hbase.zk_quorum = master:2181,slave1:2181,slave2:2181

- Initialize (create the HBase tables)

cd /usr/share/opentsdb/tools/

env COMPRESSION=NONE HBASE_HOME=/usr/local/hbase ./src/create_table.sh

- Start

vim /usr/bin/tsdb

# tsdb cannot find java, so set its path in this file:

JAVA=/usr/java/jdk1.7.0_71/bin/java

/etc/init.d/opentsdb start

# Create a metric

tsdb mkmetric <metric>
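Once a metric exists, a data point can be written through the TSD's telnet-style interface. This is a sketch. tsdb_put and the metric name test.metric are hypothetical. The usage assumes the TSD listens on port 4242 (tsd.network.port) on master and that test.metric was created beforehand with tsdb mkmetric test.metric. OpenTSDB requires at least one tag per data point.

```bash
#!/usr/bin/env bash
# tsdb_put METRIC VALUE TAGK=TAGV...: print one OpenTSDB "put" line:
#   put <metric> <unix-seconds> <value> <tagk=tagv>...
# The timestamp comes from TS if set, otherwise from date (hypothetical helper).
tsdb_put() {
  local metric=$1 value=$2
  shift 2
  if [ "$#" -eq 0 ]; then
    echo "OpenTSDB needs at least one tag" >&2
    return 1
  fi
  printf 'put %s %s %s %s\n' "$metric" "${TS:-$(date +%s)}" "$value" "$*"
}
```

Usage: tsdb_put test.metric 42 host=master | nc master 4242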

- Client

Installing tcollector is not covered here.